By Susan Pinker

https://www.wsj.com/tech/ai/does-working-with-robots-make-humans-slack-off-b7b645c1

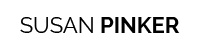

Robots can perform surgery, shampoo someone’s hair, read a mammogram and, of course, drive a car. A chatbot could probably write my column, if I let it. Now that machines can do nearly everything humans do, the question is what effect they have on human motivation. Do they make our lives easier and more efficient, or do they turn us into slackers?

A study published in October in the journal Frontiers in Robotics and AI has an answer: A person who works alongside a robot is less likely to focus on details than when he or she works alone. Anyone who has worked in a team knows that one or two people usually carry the load while the others sit back and watch; researchers call this “social loafing.” It turns out that people treat robots the same way.

The study was led by Helene Cymek of the Technical University of Berlin, who has previously studied social loafing involving human partners. “When two pilots are in a cockpit monitoring the dashboard, they each reduce their effort. [Airlines require] two people because they want to increase safety. They call this the four-eye principle. But we found social loafing,” Cymek said.

For the new study, she and her colleagues recruited 44 volunteers to inspect electronic components for manufacturing errors such as bad welds or seams—a task that, in factories, is often performed by humans paired with robots. The volunteers were divided into two groups. One worked alone, while the other was told to double-check components that had already been inspected by a robot named Panda. People in that group were shown Panda—an articulated arm with a visual sensor on the end—on their way into the lab and heard it humming along as they worked.

But in fact, there was no robot at work. Both groups were deliberately given a set of components that included the same number of mistakes, so if the two groups were giving the same degree of attention to the task, their results should have been roughly the same. Instead, the researchers found that the humans working alone picked up an average of 4.2 out of 5 errors, while those who thought they were being assisted by a robot detected an average of 3.2. That is a detection rate of 64% versus 84%, a gap of 20 percentage points.

It’s not a huge difference, but if you think about quality-control teams where humans and AIs work together on medical imaging or aircraft navigation, it’s clear that the phenomenon of social loafing could potentially carry a high cost. For instance, Cymek notes, it’s known that in mammography screening, it makes a difference if a radiologist is checking an image for the first time or double-checking someone else’s work: “If the person knows it’s been checked first, they slack off.”

This finding doesn’t exactly boost my confidence in self-driving cars, even if there’s a human at the wheel to take over in an emergency. “People take advantage of the support that is offered—they’re over-reliant on the system,” Cymek said. “They look but do not see.”

Appeared in the November 25, 2023, print edition as ‘Does Working With Robots Make Humans Slack Off?’.